Governments worldwide are rushing to regulate artificial intelligence (AI) research and its use as the field continues to grow rapidly. In the United States, this legislative push is emerging at the state level, where individual legislators are introducing bills aimed at protecting people from the adverse effects of AI systems. Tech giants like Google and OpenAI are pushing back, arguing that scattered state-level rules undermine innovation, impede competition, and create a patchwork of compliance requirements that is nearly impossible to navigate.

Google and OpenAI contend that inconsistent state-level AI rules force businesses to deal with a web of conflicting regulations across many states, which is both expensive and operationally difficult for companies building models that are deployed across state lines. An AI model that is lawful in California could be considered unlawful in Texas or require different disclosures in New York. This fragmentation creates uncertainty not only for developers but also for the consumers, teachers, and companies that depend on these tools.

Both companies also stress that overly strict or poorly defined policies could stifle innovation. If companies and researchers face regulatory ambiguity or burdensome compliance requirements, they may cancel or postpone projects, weakening the nation's competitive position in the global AI race. In their view, the key to balancing innovation with accountability is a coherent national plan rather than a collection of state policies. Google and OpenAI support federal leadership in creating consistent, scalable guidelines that apply across sectors and regions.

From the standpoint of state legislators, regulation is driven by the pressing need to protect consumers from the actual and perceived dangers of AI. These include algorithmic bias, data privacy violations, job displacement, surveillance, misinformation, and even problems with child safety and mental health. In the absence of federal law, states such as California, New York, and Illinois have taken the initiative to create their own frameworks to address these growing concerns.

State authorities further contend that waiting for federal regulation could take years, during which harms could go unchecked. In their view, local regulation lets them tailor laws to the needs and values of their communities. This approach helps them hold technology companies accountable and fill a significant policy gap. To them, the industry's pushback looks more like opposition to regulation itself than a genuine concern for efficiency or innovation.

OpenAI, known for creating ChatGPT and other foundation models, has been outspoken about its preference for a centralized, federal approach to AI governance. In public speeches and testimony, its leadership has repeatedly emphasized the need for a national framework that balances innovation with ethics while avoiding the complexities of multi-jurisdictional compliance. To guide ethical AI development globally, OpenAI has also advocated for an international AI oversight body modeled on the International Atomic Energy Agency.

By supporting centralized oversight, OpenAI aims to establish guiding principles that are flexible enough to adapt to technological advances but strong enough to ensure safety. It believes a fragmented landscape would produce inconsistent safety policies and create loopholes that bad actors could exploit. The company also argues that centralization keeps any single group from dominating the conversation and allows for more unified communication among stakeholders: governments, scholars, civil society, and businesses.

Google has long funded lobbying and public policy initiatives related to digital technology, and AI is no exception. Arguing that overregulation, especially at the state level, could stifle innovation and undermine America's leadership in AI, the company has stepped up its lobbying in Washington and in state capitals. Google favors an approach that emphasizes openness, voluntary commitments, and industry self-regulation, backed by clear federal rules rather than localized mandates.

Google has adopted internal AI Principles intended to guide the ethical development and use of its products. These include commitments to societal benefit, privacy, security, and fairness. By highlighting such voluntary initiatives, Google aims to show that the industry is capable of governing itself. Critics counter that voluntary ethics are insufficient and that legally enforceable rules are needed to ensure real accountability, particularly as AI becomes increasingly embedded in sensitive areas such as healthcare and education.

State-level rules also raise serious concerns that they could inhibit cross-state innovation and create an uneven playing field. Businesses may decide not to launch new features in heavily regulated states, denying consumers access to the latest technologies. Inconsistent regulations may also deter smaller companies that lack the legal resources to navigate complex, multi-state compliance obligations. Ironically, this could entrench the dominance of the largest tech companies, giving more power to the very businesses these rules aim to control.

State laws that contradict one another, or that conflict with future federal legislation, could also lead to legal uncertainty and delays in implementation. A company could comply with one state's privacy law, for instance, only to discover that another state's law contradicts it. In such cases, legal disputes are almost certain to follow, consuming time, money, and attention that could be better spent on innovation and safety improvements. In this sense, fragmentation threatens not just the industry but technological progress itself.

Despite their opposition to state-level rules, Google and OpenAI have shown a willingness to work with legislators on a measured approach to regulation. Both companies have published safety protocols for public comment and attended government-led AI conferences. There is growing agreement that some guardrails are essential, especially for models with high autonomy, general-purpose capabilities, or high-risk applications in areas such as banking, law, and healthcare.

Cooperative federalism, in which federal rules set a baseline and states retain the power to go further if necessary, may offer a workable answer. This framework would respect local concerns while preserving innovation. Another promising path is the development of industry standards by organizations such as the National Institute of Standards and Technology (NIST), which could provide guidance to both federal and state authorities. Close communication, mutual respect, and shared accountability for AI's impact on society will help bridge the divide between tech companies and legislators.

The conflict between Google, OpenAI, and state authorities is more than a political standoff; it marks a turning point in the broader story of AI's evolution. The outcome of this debate will shape how future technologies are developed, governed, and shared. Whether the result is uniform standards or a patchwork of regional rules, the choice will affect innovation either way. Can governments and tech giants find common ground before an AI-related crisis forces their hand?

AI is no longer an abstract concept; it is a present and evolving force in our lives. Because it is constantly changing across sectors and societies, responsible regulation is not optional but necessary. To succeed, however, regulation must be smart, scalable, and cooperative. Legislators must understand the technology they aim to regulate, and tech companies must acknowledge their influence. Only then will we be able to build a future in which AI benefits humanity rather than divides it.

Advertisement

How the AI-driven wireless tree network transforms wildfire defense by connecting forests with sensors and artificial intelligence to detect and predict fires in real time

How far can AI go when it comes to problem-solving? Google's new Gemini model steps into the spotlight to handle complex tasks with surprising nuance and range

Explore Apple Intelligence and how its generative AI system changes personal tech with privacy and daily task automation

Learn how to delete your ChatGPT history and manage your ChatGPT data securely. Step-by-step guide for removing past conversations and protecting your privacy

Learn how to boost sales with Generative AI. Learn tools, training, and strategies to personalize outreach and close deals faster

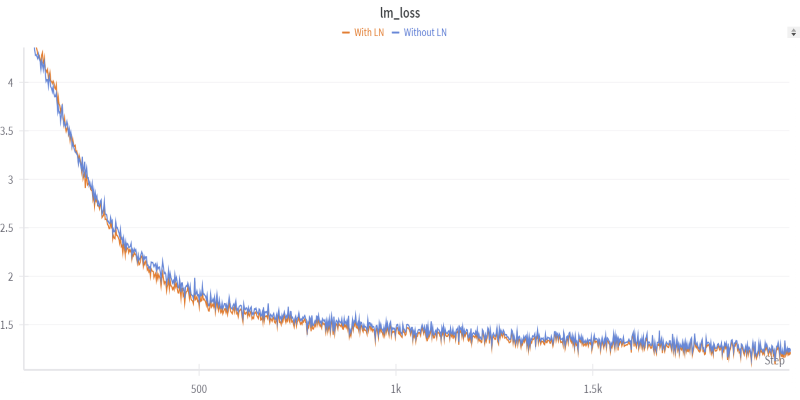

Want to shrink a large language model to under two bits per weight? Learn how 1.58-bit mixed quantization uses group-wise schemes and quantization-aware training

A Nvidia AI-powered humanoid robot is now serving coffee to visitors in Las Vegas, blending advanced robotics with natural human interaction in a café setting

Explore Airflow data intervals, scheduling, catch-up, and backfilling to design reliable workflows and optimize task execution

What loss functions are, why they matter, and how they guide machine learning models to make better predictions. A beginner-friendly explanation with examples and insights

Meta launches Llama 4, an advanced open language model offering improved reasoning, efficiency, and safety. Discover how Llama 4 by Meta AI is shaping the future of artificial intelligence

Delta partners with Uber and Joby Aviation to introduce a hyper-personalized travel experience at CES 2025, combining rideshare, air taxis, and flights into one seamless journey

Turn open-source language models into smart, action-taking agents using LangChain. Learn the steps, tools, and challenges involved in building fully controlled, self-hosted AI systems