In machine learning, there are two broad categories of methods for making predictions or decisions from data: lazy learning and eager learning. The two differ not only in their internal mechanics but also in when and how they learn from data. Understanding both is important for choosing the right algorithm for your machine learning project. This guide describes each approach in turn.

Lazy learning is a technique in which the model does very little work until prediction time. The term "lazy" might suggest inefficiency, but it simply describes the algorithm's process: it waits until it has an instance to classify or predict before performing any computation. The k-Nearest Neighbors (k-NN) algorithm is one of the best-known lazy learning algorithms.

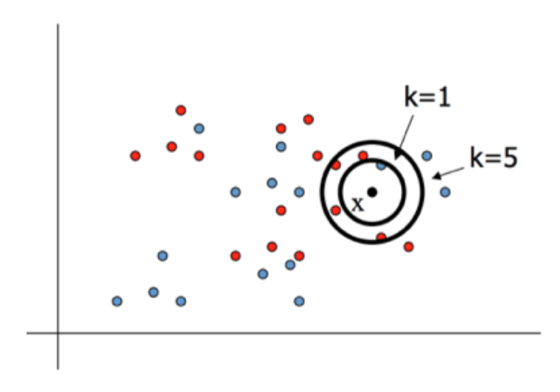

Lazy learning algorithms don't create an explicit model of the data. Rather, they retain the training data and make a decision by matching incoming test data against the retained data. In response to a new query or input, the algorithm searches for the nearest data points among the training set (based on some measure of distance, such as Euclidean distance) and makes the prediction based on these neighbors.

For instance, in k-NN classification, the prediction for a new data point is the majority class among its k nearest neighbors. For a regression problem, the prediction is instead the average or weighted average of the neighbors' values.
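The neighbor search and voting described above can be sketched in a few lines of standard-library Python. This is a minimal illustration rather than a production implementation: the function names (`euclidean`, `k_nearest`, `knn_classify`, `knn_regress`) and the toy data are made up for this example, and the regression variant uses a plain (unweighted) average.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Euclidean distance between two equal-length numeric sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def k_nearest(train_X, query, k):
    """Return the indices of the k stored points closest to the query."""
    order = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], query))
    return order[:k]

def knn_classify(train_X, train_y, query, k=3):
    """Classification: majority class among the k nearest neighbors."""
    neighbors = k_nearest(train_X, query, k)
    votes = Counter(train_y[i] for i in neighbors)
    return votes.most_common(1)[0][0]

def knn_regress(train_X, train_y, query, k=3):
    """Regression: unweighted average of the k nearest neighbors' values."""
    neighbors = k_nearest(train_X, query, k)
    return sum(train_y[i] for i in neighbors) / len(neighbors)

# Hypothetical toy data: two well-separated clusters.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["a", "a", "a", "b", "b", "b"]
values = [10.0, 12.0, 11.0, 50.0, 52.0, 48.0]

# "Training" is just keeping the lists above; all work happens per query.
predicted_label = knn_classify(points, labels, (1.1, 1.0), k=3)
predicted_value = knn_regress(points, values, (5.0, 5.0), k=3)
print(predicted_label, predicted_value)
```

Note that nothing is computed until a query arrives, which is exactly what makes the method "lazy".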

Simplicity and Adaptability: Lazy learning algorithms are easy to implement and can easily adapt to new data since no model is built during the training phase. This makes them highly flexible when handling data changes.

No Training Phase: Lazy learners don’t require a lengthy training phase, which means quicker setup and immediate use once the data is stored. This can be an advantage when dealing with fast-moving data or smaller datasets.

High Computational Cost at Prediction Time: Since lazy learners defer all work to query time, making predictions can be computationally expensive, especially with large datasets. In a straightforward implementation, the algorithm must compare the new data point with every stored instance to make a decision.
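To make the prediction-time cost concrete, the sketch below counts distance computations in a brute-force nearest-neighbor search. The class `BruteForceNeighbors` and its `distance_calls` counter are hypothetical names invented for this illustration; the point is only that each query touches every stored instance, while adding new data is a cheap append.

```python
import math

class BruteForceNeighbors:
    """Illustrative lazy learner that stores points and scans them per query."""

    def __init__(self):
        self.points = []
        self.distance_calls = 0  # counter added purely for demonstration

    def add(self, point):
        # "Training" is only storage, so new data is absorbed immediately.
        self.points.append(point)

    def nearest(self, query):
        """Return the stored point closest to the query (linear scan)."""
        best_point, best_distance = None, math.inf
        for point in self.points:
            self.distance_calls += 1
            distance = math.dist(point, query)  # Euclidean distance (Python 3.8+)
            if distance < best_distance:
                best_point, best_distance = point, distance
        return best_point

store = BruteForceNeighbors()
for i in range(1000):
    store.add((float(i), float(i % 7)))

queries = [(10.2, 3.1), (500.0, 0.0), (999.0, 5.0), (42.0, 0.0), (7.5, 0.5)]
results = [store.nearest(q) for q in queries]

# 5 queries against 1000 stored points cost 5 * 1000 distance computations.
print(store.distance_calls)
```

In this sketch the per-query work grows linearly with the number of stored points, which is why the cost becomes noticeable as the dataset grows.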

Memory Usage: Storing the entire training dataset can be memory-intensive, particularly when the dataset grows in size.

Limited Generalization: Lazy learners may struggle to generalize well, particularly in noisy datasets, since they rely on specific instances rather than abstract models. This can lead to overfitting in some cases.

Eager learning algorithms, on the other hand, are more proactive. These algorithms build a model of the data during the training phase, and the model is used to make predictions when new data comes in. The model is constructed before the actual predictions begin, hence the term "eager."

One of the classic examples of eager learning algorithms is Decision Trees, where a tree-like structure is built using training data, and later, this structure is used to predict new instances. Other well-known eager learners include Support Vector Machines (SVMs) and Neural Networks.

Eager learning works by taking the entire training dataset and constructing a model. The idea is to learn from the data in such a way that the model can then make predictions for any new data without needing to reference the entire training set. The model-building process can involve finding patterns, structures, or features that help in distinguishing between classes or predicting outcomes.

For example, in decision trees, the algorithm works by recursively splitting the dataset based on feature values, aiming to minimize impurity (like entropy or Gini index) at each split. Once the tree is built, classifying a new sample is a matter of traversing the tree from the root to a leaf, which results in a prediction.
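The splitting and traversal steps can be sketched as follows. This is a simplified illustration under stated assumptions: it handles a single numeric feature, uses the Gini index as the impurity measure, and represents the tree as nested dictionaries. The names `gini`, `best_split`, `build_tree`, and `predict`, as well as the toy data, are invented for this example and do not correspond to any particular library.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(xs, ys):
    """Find the threshold on one numeric feature with the lowest weighted Gini."""
    best_threshold, best_score = None, float("inf")
    values = sorted(set(xs))
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2  # midpoint between adjacent distinct values
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score

def build_tree(xs, ys, depth=0, max_depth=3):
    """Recursively split until a node is pure or max_depth is reached."""
    majority = Counter(ys).most_common(1)[0][0]
    if gini(ys) == 0.0 or depth == max_depth:
        return {"leaf": majority}
    threshold, _ = best_split(xs, ys)
    if threshold is None:  # all feature values identical, cannot split
        return {"leaf": majority}
    left = [(x, y) for x, y in zip(xs, ys) if x <= threshold]
    right = [(x, y) for x, y in zip(xs, ys) if x > threshold]
    return {
        "threshold": threshold,
        "left": build_tree([x for x, _ in left], [y for _, y in left], depth + 1, max_depth),
        "right": build_tree([x for x, _ in right], [y for _, y in right], depth + 1, max_depth),
    }

def predict(tree, x):
    """Traverse from the root to a leaf and return the leaf's class."""
    while "leaf" not in tree:
        tree = tree["left"] if x <= tree["threshold"] else tree["right"]
    return tree["leaf"]

# Hypothetical toy data: "high" only in the middle range of the feature.
xs = [1.0, 2.0, 3.0, 6.0, 7.0, 8.0, 11.0, 12.0]
ys = ["low", "low", "low", "high", "high", "high", "low", "low"]

tree = build_tree(xs, ys)  # all the expensive work happens here, once
print(predict(tree, 2.5), predict(tree, 7.0), predict(tree, 20.0))
```

Once `build_tree` has run, `predict` only follows a handful of threshold comparisons and never consults the training data again, which is the defining trait of an eager learner.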

Fast Predictions After Model Building: Once the model is trained, eager learning algorithms can make predictions quickly. The model abstracts the data, enabling rapid decision-making without needing to reference the entire dataset.

Better Generalization: Eager learners often generalize well since they create a model that captures the underlying patterns in the data rather than individual instances, which can reduce sensitivity to noise when the model is properly tuned.

Efficient Use of Data: By focusing on key patterns during training, eager learners make better use of the data, especially in large and diverse datasets.

Expensive Training Phase: The process of building a model can be time-consuming and computationally expensive, especially with complex algorithms and large datasets. This initial setup can be a significant drawback for certain applications.

Less Flexibility with New Data: Once the model is built, eager learners are less flexible when new data deviates from the training data. This often requires retraining or updating the model to handle changes.

Risk of Overfitting: Without careful tuning, eager learning models can become overly complex, resulting in overfitting to the training data. This can impair their ability to perform well on unseen data.

Choosing between lazy and eager learning depends on the nature of your problem. If you have a dataset that changes frequently or is small enough that prediction-time cost won't be an issue, a lazy learner might be appropriate. However, if your task involves a large dataset or if you need fast predictions once the model is trained, eager learning might be a better choice.

For instance, if you are working with a large amount of data and expect many queries, eager learning methods like decision trees or support vector machines may be more appropriate, as they allow for fast predictions after the model is built. Conversely, if your data is relatively small and you expect only a modest number of queries, k-NN could serve you well with minimal upfront effort.

Both lazy and eager learning algorithms offer distinct advantages and challenges in machine learning. Lazy learning, with its simplicity and adaptability, is ideal for problems where the data changes frequently, but the trade-off is high computational cost at prediction time. Eager learning, on the other hand, excels when you need quick predictions after a one-time training process and are working with larger, stable datasets. Understanding the strengths and weaknesses of each will help you select the right approach for your machine learning projects.
