Advertisement

Large Language Models (LLMs) are known for their size. Running them efficiently—especially outside high-end server clusters—means shrinking their weight without cutting out their ability to reason or generate coherent text. That's where quantization comes in. You’ve probably heard of 8-bit or even 4-bit quantization. But 1.58-bit? Now that’s pushing the boundary. And yes, it’s not just theory. It works—and works surprisingly well.

Let’s walk through what this level of compression means, why it’s a big deal, and how it’s done without tanking performance.

To make sense of this odd number, let's take a step back. Quantization reduces the precision of weights in a model. Instead of using 16- or 32-bit floating-point numbers, you use integers. Fewer bits per weight means less memory usage and faster computations.

Now, 1.58-bit quantization isn’t a new datatype—it’s a statistical target. You’re essentially averaging 1.58 bits per weight across the model. That’s achieved by mixing multiple weight groups with different bit sizes, often using techniques like grouped quantization or product quantization to bring the overall footprint down.

This level of quantization would seem like a guaranteed recipe for degraded performance. And yet, models remain surprisingly usable after it—if the quantization is done right.

At this point, you might be asking how LLMs, with their millions (or billions) of weights, manage to still reason, summarize, and write halfway decently when squashed down to this degree.

The answer lies in where the compression hits and how much of the structure remains intact. Not all parts of a model are equally sensitive to changes. You can aggressively quantize attention layers while preserving more detail in the feedforward layers—or vice versa—depending on the task.

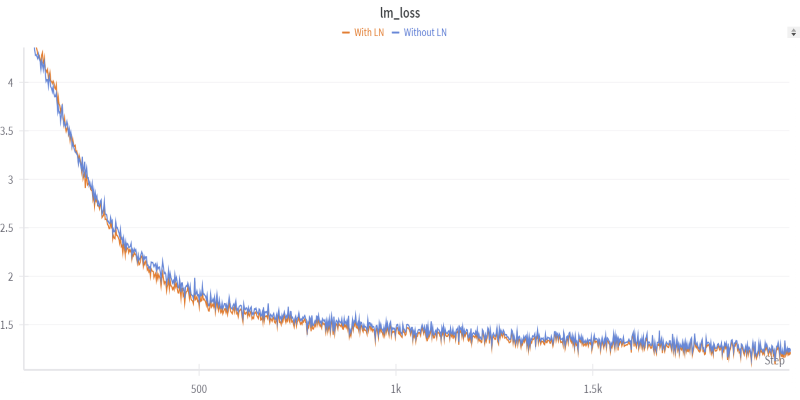

Also, quantization-aware training plays a role. Instead of training the model fully and quantizing afterward, you fine-tune it while simulating lower-bit weights. This lets the model adjust its parameters within the constraints of reduced precision.

Another trick? Using group-wise quantization with error compensation. You don't treat all weights the same. By assigning more bits to critical weights and fewer to those that don't affect output much, you preserve function without blowing up.

If you’re planning to try this yourself, here's a clear way to go about it.

This isn't the kind of thing you do from scratch. Use a well-trained model as your base. Popular options, such as LLaMA, GPT-J, or Mistral, are good starting points.

Download the checkpoint and make sure you can run it in its original form. You’ll want baseline outputs for comparison.

You're aiming for 1.58 bits on average. This often means mixing 2-bit quantization with sparser regions that are even lower in resolution. Product quantization, also known as group-wise quantization with mixed precision, is your best bet here.

Use tools like GPTQ or AWQ that let you simulate mixed-bit setups. Configure them to split the weights into groups and assign bit budgets accordingly.

Some groups might use 2 bits, others just 1. The idea is that the global average stays close to 1.58 bits per weight.

Before actual fine-tuning, calibrate the quantized model using a small dataset. This helps the quantizer understand value ranges and avoid rounding out important patterns.

You don’t need a massive dataset for this step—just enough to represent the kind of inputs the model is expected to handle. Think of it like setting the dials before a recording session.

Once calibrated, you move to fine-tuning. But not the usual float32 fine-tuning. You’re going to update weights while simulating their quantized versions.

This means using quantization-aware optimizers and ensuring gradient calculations respect the quantization scheme. You’re not updating the original high-precision weights—you’re updating them under the constraints of 1.58-bit representation.

Make sure your boss doesn't spike during this stage. If it does, consider revisiting the bit allocation or increasing regularization. Stability is key. Even a small jump in training loss can wreck downstream performance in a quantized model.

Once fine-tuning wraps up, run the model through a series of tasks. You want to know how much quality has been preserved.

Focus on latency, token accuracy, perplexity, and memory usage. Also, do some side-by-side comparisons. Take a few prompts and compare the 1.58-bit model output with the original model output. You'll likely see more compression artifacts in creative tasks but less so in structured ones, such as summarization or classification.

This level of quantization isn't for everything. But in the right context, it works wonders. Want to run an LLM locally on a laptop with limited RAM? Done. Deploying on-edge devices? This makes it practical. Need faster response time in a chatbot without major infrastructure? It's a solid option.

Models fine-tuned this way still perform well for structured outputs, simple Q&A, summarization, and form filling. You won’t use them for creative writing or nuanced long-form reasoning, but they’re more than enough for light inference tasks. It’s also handy for proof-of-concept demos, low-cost prototyping, and situations where latency matters more than perfection.

Compressing an LLM to 1.58 bits per weight used to sound like wishful thinking. But with careful planning, smart quantization, and a bit of fine-tuning, it’s become a workable strategy for real-world use.

You don’t need racks of GPUs to run a large model. With the right setup, you can shrink down a language model to the point where it fits on consumer hardware, without turning it into a garbled mess. And that’s a pretty exciting shift in how we think about deploying AI.

Advertisement

How the latest PayPal AI features are changing the way people handle online payments. From smart assistants to real-time fraud detection, PayPal is using AI to simplify and secure digital transactions

GenAI helps Telco B2B sales teams cut admin work, boost productivity, personalize outreach, and close more deals with automation

Turn open-source language models into smart, action-taking agents using LangChain. Learn the steps, tools, and challenges involved in building fully controlled, self-hosted AI systems

Discover strategies to train employees on AI through microlearning and hands-on practice without causing burnout.

Learn how to boost sales with Generative AI. Learn tools, training, and strategies to personalize outreach and close deals faster

Discover the top eight ChatGPT prompts to create stunning social media graphics and boost your brand's visual identity.

Explore Apple Intelligence and how its generative AI system changes personal tech with privacy and daily task automation

Discover why global tech giants are investing in Saudi Arabia's AI ecosystem through partnerships and incentives.

What happens when Nvidia AI meets autonomous drones? A major leap in precision flight, obstacle detection, and decision-making is underway

Want OpenAI-style chat APIs without the lock-in? Hugging Face’s new Messages API lets you work with open LLMs using familiar role-based message formats—no hacks required

AI in Travel is changing the way people explore the world. Discover 19 real examples of how artificial intelligence improves planning, customer service, pricing, and personalization in modern tourism

Want to shrink a large language model to under two bits per weight? Learn how 1.58-bit mixed quantization uses group-wise schemes and quantization-aware training