
It’s not just about bigger models anymore; it’s about smarter ones. Google’s release of its new Gemini model signals a shift in how artificial intelligence approaches difficult, multi-layered problems. Rather than relying only on scale or raw processing power, Gemini was built to reason through problems step by step. That means handling tasks with multiple variables, switching between data types on the fly, and responding to nuanced user prompts with something more than a generic answer. It’s part of Google DeepMind’s broader strategy to move AI from a predictive tool to a real reasoning agent.

This version of Gemini isn’t just an upgrade—it’s a step away from old habits. Earlier AI systems often hit a wall when asked to handle logical reasoning, multi-step processes, or cross-domain knowledge. Gemini’s main strength lies in its ability to juggle all of that at once. This isn’t a language model pretending to understand—this is a system built to work through problems with structure and clarity. The timing matters too. With every major tech company chasing multi-modal AI, Gemini’s performance across video, audio, text, and code pushes the conversation past benchmarks and into real-world applications.

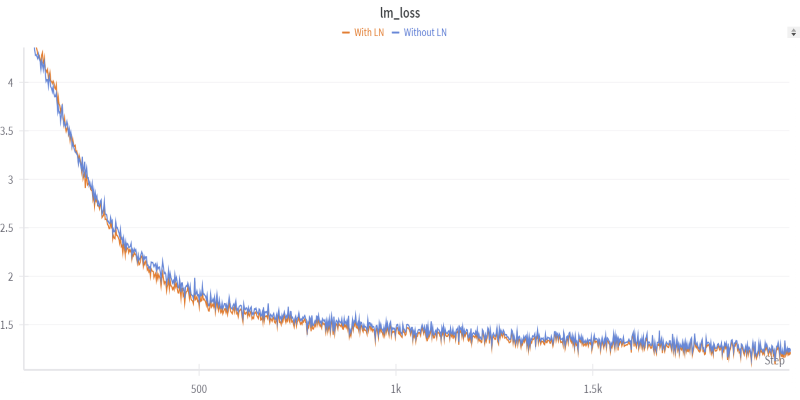

At the core of the new Gemini model is its training process, which diverges from traditional language modeling routines. Instead of feeding the system endless amounts of text and asking it to predict what comes next, Google trained Gemini with a specific emphasis on reasoning and logic. That means it doesn’t just parrot facts or patterns; it builds context and weighs alternatives. When given a complex prompt involving math, code, or logic, Gemini shows improved consistency and fewer hallucinations than previous models in the same class.

Another key difference is how Gemini processes inputs. It doesn’t treat text, images, and audio as separate silos. It fuses them. For instance, if someone uploads a graph, a short voice note, and a few lines of text describing a scientific hypothesis, Gemini doesn't just respond in fragments. It takes all three formats into account at once to form a single, connected interpretation. This multi-modal integration is what sets it apart from models that bolt on vision or audio features as secondary tools.

The model also handles context length better than its predecessors. Many older models struggled to keep track of long conversations or documents, often dropping key context midway. Gemini shows better memory and attention over extended inputs, which makes it more reliable for long-form queries like technical troubleshooting, academic synthesis, or legal document analysis. These aren’t flashy demos—they’re practical uses that demand accuracy.

What’s interesting about Gemini isn’t just what it can do in theory, but how it’s being tested in everyday tools. Google is already integrating Gemini into products such as Search, Docs, and Gmail. In Search, it helps break down dense questions into digestible responses, often with better clarity than standard results. In Google Docs, it’s being used to rewrite and restructure messy content, not just fix grammar. And in Gmail, it’s moving from template generator toward writing assistant.

But the reach goes further than Google’s platforms. Developers using the Gemini API have begun testing it for advanced customer support automation, tutoring systems, financial analysis, and even code debugging. Unlike models that require extensive fine-tuning to work effectively in niche domains, Gemini can often perform well with minimal retraining. That’s mostly because it was trained on a diverse dataset that includes logic-based problems, real-world reasoning examples, and cross-disciplinary questions.

In the field of education, the Gemini model is being explored for personalized learning assistants that can adjust the pace and complexity of their explanations based on a student’s past responses. Rather than pushing pre-written answers, it adapts in real time. In medical research, Gemini’s ability to synthesize data from academic papers, lab notes, and image-based diagnostics gives it an edge in assembling complex case summaries or suggesting next steps in treatment planning.

Even with these upgrades, Gemini is not perfect. Handling complex problems means facing unpredictable edge cases. In situations where ethical reasoning or cultural context is required, Gemini still has limitations. Like most models, it reflects the data it was trained on, and that includes subtle biases, occasional gaps, and skewed assumptions. Google has acknowledged these risks and says it is building feedback loops and guardrails, but in practice oversight remains a concern.

Another issue is speed and cost. Handling multi-modal, multi-step tasks often means higher computational requirements. While Gemini is efficient relative to its size, the infrastructure cost of running it at full capacity may limit accessibility for smaller teams or solo developers. There’s also the question of transparency: how much of its reasoning is interpretable to the user? Right now, Gemini doesn’t always explain how it reaches a conclusion, which could matter in legal, scientific, or academic settings where traceability is essential.

Despite these points, Gemini still marks a jump in how we frame AI’s role. It’s not a novelty tool or a chatbot. It’s meant to be a system that tackles hard questions—and doesn’t just stop at the first layer of answers.

Google’s new Gemini model isn’t just about more power—it’s about better thinking. Built to handle complex problems with logic and context, Gemini marks a shift from fast, surface-level responses to deeper, more structured reasoning. It blends text, images, audio, and code to solve real-world tasks that older models struggled with. Early signs from tools like Search and Docs show it’s more than hype. It won’t replace human thinking, but it’s getting better at supporting it. Gemini feels less like a flashy upgrade and more like a quiet redefinition of what useful AI can be.
