
Not long ago, taking an NLP (Natural Language Processing) course meant learning about tokenization, part-of-speech tagging, named entity recognition, and maybe some sentiment analysis using simple classifiers or sequence models. You'd work with tools like NLTK or spaCy, explore syntax trees, and gradually move toward word embeddings and recurrent neural networks. It was technical but manageable.

That landscape has shifted. The same courses are being overhauled: rewritten, restructured, and rebranded. The focus is no longer just NLP; it's LLMs. The NLP course is becoming the LLM course, and this is not a small update. It's a complete rewrite of what it means to study language with machines.

Traditional NLP leaned heavily on rules, statistics, and modest machine learning models. Early systems were built on manually curated rules and later evolved into probabilistic approaches like Hidden Markov Models and Conditional Random Fields. These systems required careful feature engineering. In the classroom, students were taught to dissect text, build pipelines, and understand grammar and sentence structure using tools that resembled linguistic toolkits more than general-purpose AI.

Then came neural networks. Word embeddings like Word2Vec and GloVe represented words as dense vectors learned from the contexts they appear in, so words used in similar ways ended up close together. Models started learning meaning from data instead of relying on hand-tuned patterns. Through this period, courses still followed a step-by-step build-up, from preprocessing to classification tasks, slowly integrating deep learning through LSTMs and GRUs.

Everything changed when Transformers arrived.

The 2017 paper “Attention Is All You Need” introduced the Transformer architecture, which discarded recurrence in favor of self-attention mechanisms. That single shift made large-scale pretraining of language models practical, producing models that represent text in richer, more context-sensitive ways. BERT and GPT models showed what was possible when massive datasets met deep architectures.
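To make "self-attention" concrete, here is a minimal sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, in plain Python. It assumes a single attention head and, as a simplification, passes token vectors directly as queries, keys, and values; a real Transformer first maps each token through learned linear projections and runs many heads in parallel. The function names are illustrative, not taken from any library.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Each argument is a list of vectors, one per token. Simplification:
    no learned projections, no masking, no multiple heads.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score this token's query against every token's key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy "tokens", each a 2-dimensional vector, attending to one another.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention(tokens, tokens, tokens))
```

Every output vector mixes information from all positions at once, which is what lets Transformers drop the step-by-step recurrence of LSTMs and GRUs and process a whole sequence in parallel.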

In less than five years, NLP was no longer about labeling parts of a sentence. It became about fine-tuning or prompting models that had already read vast swaths of the internet.

Instructors face pressure from students and industry alike. The skills needed in today's workplaces revolve around prompt engineering, model deployment, and adapting pre-trained models to new tasks, not designing rule-based taggers from scratch.

University syllabi have started reflecting this. Introductory NLP is giving way to courses on LLMs (Large Language Models). A typical core curriculum now covers pretraining objectives (masked vs. autoregressive), in-context learning, RLHF (Reinforcement Learning from Human Feedback), hallucination mitigation, and chain-of-thought reasoning.
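The two pretraining objectives named above differ mainly in how training targets are built from raw text. The sketch below illustrates the idea on whitespace-split words rather than the subword tokens real models use. As a further simplification, the masked positions are chosen explicitly; BERT-style training instead selects a random fraction of tokens and does not always replace them with a mask symbol. The `[MASK]` string and the function names are illustrative.

```python
MASK = "[MASK]"

def autoregressive_pairs(tokens):
    """Causal (GPT-style) objective: predict each token from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def masked_example(tokens, mask_positions):
    """Masked (BERT-style) objective: hide some tokens and predict them
    from the context on both sides."""
    inputs = [MASK if i in mask_positions else t for i, t in enumerate(tokens)]
    targets = {i: tokens[i] for i in mask_positions}
    return inputs, targets

sentence = ["the", "cat", "sat", "down"]
for context, target in autoregressive_pairs(sentence):
    print(context, "->", target)
print(masked_example(sentence, {1, 3}))
```

The autoregressive model only ever sees the left context, which is why it can generate text token by token. The masked model sees both sides of each gap, which suits understanding tasks more than open-ended generation.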

What’s being taught isn’t just syntax or sentiment anymore. It’s model alignment, safety constraints, fine-tuning through APIs, and multi-turn dialogue systems. These are not just different topics. They represent a different mindset. The model is no longer something you build from scratch; it’s something you shape, interpret, or control.

Even tools have changed. In many classrooms, Hugging Face’s Transformers library has taken the place NLTK once held. Instead of writing tokenizers, students work with token IDs, model configs, and CUDA setups. It’s not uncommon to see classes use OpenAI or Cohere APIs in their assignments. What used to be optional is now central.

This shift from traditional NLP to LLMs doesn’t mean the foundational material is obsolete. Concepts like attention, vector representations, and language structure still matter, but how they are taught and used has changed.

Students today must learn how to evaluate language models, probe for biases, handle hallucinated outputs, and create prompts that elicit reliable responses. They need to understand not just what the model is doing but why. This requires comfort with decoding concepts like temperature, top-k sampling, and beam search, which were once niche details of machine translation and text generation and are now everyday controls.
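Temperature and top-k are easiest to grasp as two adjustments applied to the model's raw scores (logits) before one next token is drawn. The sketch below is a minimal, framework-free version under that assumption; the logits are hand-written numbers rather than real model output, and the function name is illustrative. Beam search is a different strategy that keeps several candidate sequences alive at once and is not shown here.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Pick a token index from raw logits using temperature and optional top-k filtering."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: always take the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Keep only the k highest-scoring candidates (all of them if top_k is None or 0).
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    candidates = ranked[:top_k] if top_k else ranked
    # Dividing by the temperature sharpens (T < 1) or flattens (T > 1) the distribution.
    scaled = [logits[i] / temperature for i in candidates]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]  # unnormalized softmax weights
    return rng.choices(candidates, weights=weights, k=1)[0]

# Hypothetical scores for a four-word vocabulary: "yes", "no", "maybe", "banana".
vocab = ["yes", "no", "maybe", "banana"]
logits = [2.0, 1.0, 0.5, -3.0]
rng = random.Random(42)
print([vocab[sample_next_token(logits, temperature=0.7, top_k=2, rng=rng)] for _ in range(5)])
```

With `top_k=2`, "maybe" and "banana" can never be chosen; lowering the temperature makes "yes" increasingly dominant, while raising it spreads probability toward "no".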

Practical know-how has become a larger part of the course. Assignments might involve comparing different models on downstream tasks, measuring perplexity, or even building retrieval-augmented generation (RAG) systems. Projects often go beyond sentiment analysis and aim at building mini chatbots, summarizers, or question-answering systems using APIs. The bar has been raised.
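Perplexity, one of the measurements mentioned above, summarizes how "surprised" a model is by a text: it is the exponential of the average negative log-probability the model assigns to each actual token, exp(-(1/N) * sum of log p(token | preceding tokens)). In practice the per-token log-probabilities come from the model being evaluated; the sketch below assumes they are already available as a list of natural-log values and uses made-up numbers.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp(mean negative log-likelihood), using natural-log probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)

# Hypothetical case: a model assigns probability 0.25 to each of four observed tokens.
print(perplexity([math.log(0.25)] * 4))
```

A perplexity of 4 means the model is, on average, as uncertain as if it were choosing uniformly among four options at each step; lower is better when comparing models on the same text and tokenizer.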

Importantly, ethics is no longer a footnote at the end of the semester. With LLMs being deployed at scale, questions around bias, privacy, misinformation, and societal impact are now integrated into the curriculum. Students must learn to be responsible users and developers of these technologies.

The scale of these models also means that computing infrastructure is now part of the discussion. Cloud platforms, GPUs, latency considerations, and memory footprints matter. You don’t just run a model; you deploy and monitor it.

The shift from NLP to LLMs is not just academic. It reflects a larger change in how we think about language and intelligence. NLP was about trying to teach machines the structure of language. LLMs flip the equation. We now start with models that have absorbed immense amounts of text and ask them to behave as if they understand language. In many ways, they do.

The consequence is that courses are no longer about building narrow tools but about managing broad capabilities.

This means adapting to a new kind of literacy: understanding what large models are good at, where they fail, and how to shape their behavior, whether through fine-tuning, prompting, or post-processing. The job itself is different.

The underlying technology has become more powerful and more opaque. Teaching it requires blending engineering with interpretation. It’s not enough to know how a model works; you have to know how it behaves in the real world.

Students trained in this new wave of LLM courses are stepping into a space where language understanding is not defined by parsing trees but by emergent capabilities. The course has changed because the questions have changed. We no longer ask, “How do I extract entities?” We ask, “Can this model write a policy memo?” Or, “What happens if the model gives false information?” These are broader, deeper, and more consequential questions.

The shift from NLP to LLM courses marks a clear change in focus, tools, and expectations. Students now work with advanced models, tackling real-world tasks and ethical concerns. These courses reflect a broader move toward understanding and guiding large-scale language systems. Learning how to work with LLMs has become central as the field evolves. The transformation reaches beyond the syllabus: it is reshaping how we teach machines to use language.

Advertisement

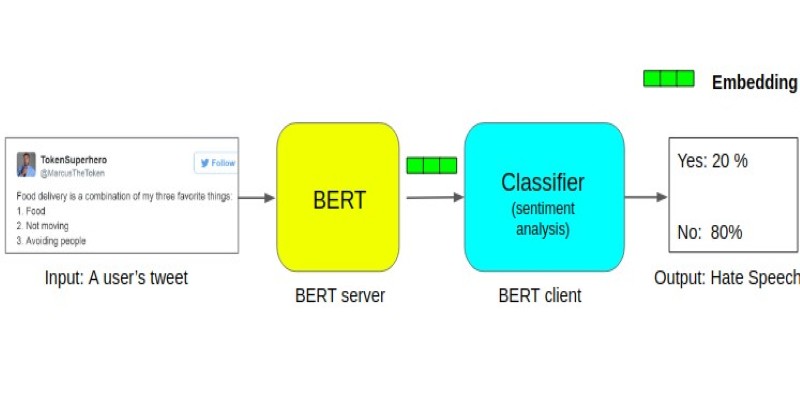

Learn about the BERT architecture explained for beginners in clear terms. Understand how it works, from tokens and layers to pretraining and fine-tuning, and why it remains so widely used in natural language processing

Discover how AI reshapes contact centers through automation, omnichannel support, and real-time analytics for better experiences

How Locally Linear Embedding helps simplify high-dimensional data by preserving local structure and revealing hidden patterns without forcing assumptions

How the latest PayPal AI features are changing the way people handle online payments. From smart assistants to real-time fraud detection, PayPal is using AI to simplify and secure digital transactions

Understand what machine learning (ML) is, its major types, why it is so important, how it works, and more in detail here

How to write a custom loss function in TensorFlow with this clear, step-by-step guide. Perfect for beginners who want to create tailored loss functions for their models

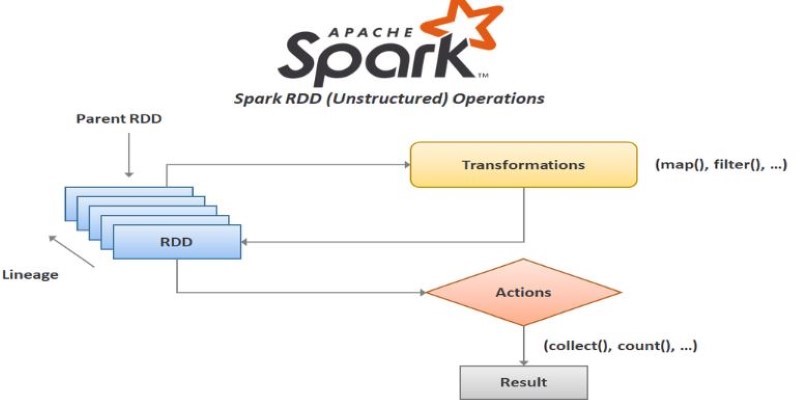

How to create RDD in Apache Spark using PySpark with clear, step-by-step instructions. This guide explains different methods to build RDDs and process distributed data efficiently

How Salesforce’s Agentic AI Adoption Blueprint and Virgin Atlantic’s AI apprenticeship program are shaping responsible AI adoption by combining strategy, accountability, and workforce readiness

Discover how ByteDance’s new AI video generator is making content creation faster and simpler for creators, marketers, and educators worldwide

Dynamic Speculation predicts future tokens in parallel, reducing wait time in assisted generation. Here’s how it works and why it improves speed and flow

Learn how to delete your ChatGPT history and manage your ChatGPT data securely. Step-by-step guide for removing past conversations and protecting your privacy

Can ChatGPT be used as a proofreader for your daily writing tasks? This guide explores its strengths, accuracy, and how it compares to traditional AI grammar checker tools